Hand Image Classification – Part 2 – Transfer Learning – Which Hand?

Introduction

In Part 1, we tackled the classification of the image of a hand to determine the number of fingers the hand is holding up. The journey we took from simple algorithms to more complex ones resulted in a Convolutional Neural Network (CNN) with the ability to perfectly classify the images of the hands it had not seen yet. In this article we would like to build an algorithm to classify whether the hand in an image is a left or right hand.

Since the images in this new problem are the same as those used in the finger-counting problem, it is reasonable to assume that the features that were important there are similar to the ones that matter here. The features typically get more complex the deeper the layer is in a Neural Network: earlier layers may respond to straight lines or edges, while later layers may identify curves and fingers.

We can then re-use our well performing model in our new task. This is called Transfer Learning. Transfer Learning is an approach that keeps the earlier layers of an original model (and their corresponding weights) while adding new layers to the end (which we train). The one issue is that we will still need to train a portion (the last few layers) of the CNN because the CNN developed already was optimised according to different labels. We will see here that simply replacing the final, Softmax, layer of the CNN with a layer with a Sigmoid activation function will enable the Neural Network (NN) to learn which combinations of the features in the second to last layer indicate a left or right hand.
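To make the role of the new output layer concrete, here is a minimal, framework-free sketch of what a single Sigmoid unit computes: a weighted sum of the features from the previous layer plus a bias, squashed into the range (0, 1). The function names, weights and feature values are made up for illustration and are not taken from the trained model.

```python
import math

def sigmoid(z):
    """Squash a real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_unit(features, weights, bias):
    """Output of one sigmoid neuron: sigmoid(w . x + b)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)

# Hypothetical 3-feature example; the real layer sees far more features
features = [0.2, 0.0, 1.5]
weights = [1.0, -2.0, 0.5]
bias = -0.1

p_label_1 = sigmoid_unit(features, weights, bias)  # read as P(label == 1)
predicted_label = 1 if p_label_1 > 0.5 else 0
print(round(p_label_1, 3), predicted_label)
```

Training the new layer amounts to finding the weights and bias that make this probability agree with the left/right labels, while the feature values themselves come from the frozen layers.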

Imports

    #import Directory # for keeping a solid directory structure. This is a local module

    import numpy as np
    np.random.seed(101) # set random seed

    import PIL # for reading in the png files
    from matplotlib import image # for displaying the image files
    import matplotlib.pyplot as plt

    import pickle # for pickling the dataset so that it takes up less space
    import bz2 # for compressing the dataset so that it takes up less space
    import os
    import time # to time some operations

    from sklearn.linear_model import LogisticRegression
    from sklearn import metrics

    import tensorflow as tf
    from tensorflow import keras
    from tensorflow.keras import layers
    from tensorflow.keras.layers import Input,Dense,Activation,Dropout,Conv2D,Flatten,BatchNormalization
    from tensorflow.keras.models import Model, load_model
    from tensorflow.keras.utils import to_categorical

    %matplotlib inline

Global variables

Here are some local directory paths we need, notably to load the pickle file and the model, and to save the model we create here. ‘Directory’ is a local module I use to keep project folders consistently structured. If the Directory module doesn’t exist, absolute paths should be used in this code snippet instead.

    image_pickle_file = os.path.join(Directory.dataPath,'fingers_pickle.pkl.bzip')
    model_h5_save = os.path.join(Directory.outputPath,'finger_count_model.h5')
    model_h5_rlmodel_save = os.path.join(Directory.outputPath,'finger_count_model_rl.h5')

    # this dictionary is to hold the datasets
    fingers_dataset = None
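If the Directory module is not available, one way to set the same variables is with a fallback. This is only a sketch: the `data` and `output` folder names are hypothetical placeholders, and `data_path` and `output_path` are names introduced here for illustration.

```python
import os

try:
    import Directory  # the author's local module, if it is importable
    data_path = Directory.dataPath
    output_path = Directory.outputPath
except ImportError:
    # Hypothetical fallback locations; replace with your own absolute paths
    data_path = os.path.abspath("data")
    output_path = os.path.abspath("output")

image_pickle_file = os.path.join(data_path, 'fingers_pickle.pkl.bzip')
model_h5_save = os.path.join(output_path, 'finger_count_model.h5')
model_h5_rlmodel_save = os.path.join(output_path, 'finger_count_model_rl.h5')

print(image_pickle_file)
```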

Transfer Learning – Predicting left hand or right hand

Load the images

Here, we load the pickle file which contains the images. This pickle file was created during Part 1.

    # start time
    t0 = time.time()

    try:
        infile = bz2.open(image_pickle_file,'rb')
    except FileNotFoundError:
        # only catch the missing-file case so other errors are not hidden
        print("Can't find pickle file: {}".format(image_pickle_file))
    else:
        print("Loading compressed pickle file...")
        fingers_dataset = pickle.load(infile)
        infile.close()
        print("Compressed pickle file load complete!")

    # print time taken
    print(time.time() - t0, "seconds taken to run this cell")
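For readers who have not seen a compressed pickle before, the sketch below shows the write and read round trip on a toy dictionary. The file name and toy data are hypothetical; only the `bz2.open` plus `pickle` pattern mirrors the loading code above.

```python
import bz2
import os
import pickle
import tempfile

# Hypothetical stand-in for the real dataset dictionary
toy_dataset = {'np_train': {'X': [[0, 1], [1, 0]]}}

with tempfile.TemporaryDirectory() as tmp_dir:
    toy_path = os.path.join(tmp_dir, 'toy_pickle.pkl.bzip')

    # write: pickle the object through a bz2-compressed file handle
    with bz2.open(toy_path, 'wb') as outfile:
        pickle.dump(toy_dataset, outfile)

    # read: the with-block closes the file even if unpickling fails
    with bz2.open(toy_path, 'rb') as infile:
        restored = pickle.load(infile)

print(restored == toy_dataset)
```

Using `with` means the file is closed automatically, which removes the need for an explicit `close()` call.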

Load the model

We are going to load the model which was saved in Part 1 and display its architecture.

    fingers_model = load_model(model_h5_save)

    # check the architecture of the model
    fingers_model.summary()

    Model: "CNNModel_with_batch_norm"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    input_7 (InputLayer)         [(None, 128, 128, 1)]     0
    _________________________________________________________________
    conv2d_2 (Conv2D)            (None, 63, 63, 64)        1088
    _________________________________________________________________
    batch_normalization_3 (Batch (None, 63, 63, 64)        256
    _________________________________________________________________
    activation_5 (Activation)    (None, 63, 63, 64)        0
    _________________________________________________________________
    conv2d_3 (Conv2D)            (None, 31, 31, 32)        18464
    _________________________________________________________________
    batch_normalization_4 (Batch (None, 31, 31, 32)        128
    _________________________________________________________________
    activation_6 (Activation)    (None, 31, 31, 32)        0
    _________________________________________________________________
    flatten_1 (Flatten)          (None, 30752)             0
    _________________________________________________________________
    fc (Dense)                   (None, 6)                 184518
    =================================================================
    Total params: 408,716
    Trainable params: 204,262
    Non-trainable params: 204,454
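The per-layer shapes and parameter counts in this summary can be checked by hand. The sketch below assumes unpadded convolutions with a 4x4 kernel and stride 2 for conv2d_2, and a 3x3 kernel and stride 2 for conv2d_3. These hyperparameters are not stated in this article; they are inferred because they reproduce the numbers in the summary.

```python
def conv_output_size(size, kernel, stride):
    """Spatial output size of a convolution without padding."""
    return (size - kernel) // stride + 1

def conv_params(kernel, in_channels, filters):
    """Kernel weights plus one bias per filter."""
    return (kernel * kernel * in_channels + 1) * filters

# Assumed hyperparameters (inferred from the summary, not confirmed)
side1 = conv_output_size(128, kernel=4, stride=2)    # conv2d_2 output side
side2 = conv_output_size(side1, kernel=3, stride=2)  # conv2d_3 output side
flat = side2 * side2 * 32                            # flatten_1 output size

conv1_params = conv_params(4, 1, 64)
conv2_params = conv_params(3, 64, 32)
fc_params = flat * 6 + 6  # one weight per feature per class, plus 6 biases

print(side1, side2, flat)
print(conv1_params, conv2_params, fc_params)
```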

Transforming the data

Next, we get the data and encode ‘L’ and ‘R’ as integers: 1 for ‘L’ and 0 for ‘R’. We also reshape the images so that they have a 4th dimension of size 1, which matches the input shape of (None, 128, 128, 1) that the NN expects. Note that the labels differ from Part 1: there the output covered 6 classes (‘Zero’, ‘One’, ‘Two’, ‘Three’, ‘Four’, ‘Five’ fingers), whereas here each image has a single binary label.

    # getting the train and test set
    # y_train_hand and y_test_hand are assumed to be arrays of 'L'/'R' labels
    # prepared beforehand (their construction is not shown in this article)
    x_train_nn = fingers_dataset['np_train']['X'].copy()
    x_train_nn = x_train_nn.reshape((x_train_nn.shape[0],x_train_nn.shape[1],x_train_nn.shape[2],1))
    # 1 for 'L', 0 for 'R'; building a new array leaves y_train_hand untouched
    y_train_nn = (np.asarray(y_train_hand) == 'L').astype(int)

    x_test_nn = fingers_dataset['np_test']['X'].copy()
    x_test_nn = x_test_nn.reshape((x_test_nn.shape[0],x_test_nn.shape[1],x_test_nn.shape[2],1))
    y_test_nn = (np.asarray(y_test_hand) == 'L').astype(int)
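Because a stray label would silently end up in the wrong class, it can be worth validating the labels before encoding them. The helper below is a hypothetical, plain-Python sketch of the same 'L' to 1 and 'R' to 0 mapping with such a check; it is not part of the original notebook.

```python
def encode_hand_labels(labels):
    """Map 'L' -> 1 and 'R' -> 0, rejecting anything else."""
    mapping = {'L': 1, 'R': 0}
    unexpected = set(labels) - set(mapping)
    if unexpected:
        raise ValueError("Unexpected labels: {}".format(sorted(unexpected)))
    return [mapping[label] for label in labels]

toy_labels = ['L', 'R', 'R', 'L']  # hypothetical sample
encoded = encode_hand_labels(toy_labels)
print(encoded)
```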

Customising the model

We have loaded the model we would like to re-use. The summary of it can be seen above in this article. What we want to do is replace the final Fully Connected layer, which does the actual classification, with our own layer which will do binary classification.

First, we will build the new layer based on the output of the second to last layer of the CNN. Then we make a new model using this layer as follows:

    # create a fully connected layer which is built on the penultimate layer of the old model
    prev_model_output = Dense(1, activation='sigmoid', name='fc_lr')(fingers_model.layers[-2].output)

    # make this into a new model
    hand_model = Model(inputs=fingers_model.input, outputs=prev_model_output, name='hand_model')

Next, we need to ensure we are not training the layers we ported over from the previous model. Below, we set all but this new layer to be non-trainable. This will save us time since those layers have already been trained to recognise important features.

    # we don't want the layers to be trainable except the last one
    for layer in hand_model.layers[:-1]:
        layer.trainable = False

    # compile the new model
    hand_model.compile(optimizer='adam', loss='binary_crossentropy', metrics = ["accuracy"])

    # print to make sure the last layer is trainable
    for layer in hand_model.layers:
        print(layer, layer.trainable)

    <tensorflow.python.keras.engine.input_layer.InputLayer object at 0x000002585A75ED30> False
    <tensorflow.python.keras.layers.convolutional.Conv2D object at 0x000002585A75EEB8> False
    <tensorflow.python.keras.layers.normalization.BatchNormalization object at 0x000002585A7BE1D0> False
    <tensorflow.python.keras.layers.core.Activation object at 0x000002585A848748> False
    <tensorflow.python.keras.layers.convolutional.Conv2D object at 0x000002585A848CF8> False
    <tensorflow.python.keras.layers.normalization.BatchNormalization object at 0x000002585A8781D0> False
    <tensorflow.python.keras.layers.core.Activation object at 0x000002585A878550> False
    <tensorflow.python.keras.layers.core.Flatten object at 0x000002585A8786A0> False
    <tensorflow.python.keras.layers.core.Dense object at 0x000002585D8E1390> True
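The model is compiled with the binary cross-entropy loss, which rewards confident correct probabilities and heavily penalises confident wrong ones. The following is a plain-Python sketch of that formula, averaged over samples. The function name and the `eps` clipping constant are choices made here for illustration, and the inputs are toy values.

```python
import math

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean of -(y*log(p) + (1-y)*log(1-p)) over all samples."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)

toy_labels = [1, 0]
confident_right = binary_crossentropy(toy_labels, [0.99, 0.01])
confident_wrong = binary_crossentropy(toy_labels, [0.01, 0.99])
print(confident_right, confident_wrong)
```

The very small loss values seen during training therefore indicate that the model assigns probabilities close to 0 or 1 on the correct side for almost every training image.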

We can now look at the architecture of this new model. Notice the final layer is now called ‘fc_lr’ which stands for Fully Connected Left – Right.

    hand_model.summary()

    Model: "hand_model"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    input_7 (InputLayer)         [(None, 128, 128, 1)]     0
    _________________________________________________________________
    conv2d_2 (Conv2D)            (None, 63, 63, 64)        1088
    _________________________________________________________________
    batch_normalization_3 (Batch (None, 63, 63, 64)        256
    _________________________________________________________________
    activation_5 (Activation)    (None, 63, 63, 64)        0
    _________________________________________________________________
    conv2d_3 (Conv2D)            (None, 31, 31, 32)        18464
    _________________________________________________________________
    batch_normalization_4 (Batch (None, 31, 31, 32)        128
    _________________________________________________________________
    activation_6 (Activation)    (None, 31, 31, 32)        0
    _________________________________________________________________
    flatten_1 (Flatten)          (None, 30752)             0
    _________________________________________________________________
    fc_lr (Dense)                (None, 1)                 30753
    =================================================================
    Total params: 50,689
    Trainable params: 30,753
    Non-trainable params: 19,936
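The trainable and non-trainable totals in this summary follow directly from the per-layer counts, as this short arithmetic check shows. The variable names are introduced here for illustration; all numbers are read from the summary.

```python
flatten_features = 31 * 31 * 32          # flatten_1 output size
fc_lr_params = flatten_features * 1 + 1  # one weight per feature, plus a bias

# frozen layers: two Conv2D layers and two BatchNormalization layers
frozen_params = 1088 + 256 + 18464 + 128
total_params = frozen_params + fc_lr_params

print(fc_lr_params, frozen_params, total_params)
```

Only the 30,753 parameters of ‘fc_lr’ are updated during training, which is roughly 60% of the parameters in the model.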

Training the model

Now we train the model and check its predictions on the train and test sets.

    # fit the model
    hand_model.fit(x_train_nn,y_train_nn,batch_size=32,epochs=5)

    # get the performance on the train and test sets
    preds = hand_model.evaluate(x_train_nn,y_train_nn,verbose=0)
    print('Train set accuracy:',preds[1])
    preds = hand_model.evaluate(x_test_nn,y_test_nn,verbose=0)
    print('Test set accuracy:',preds[1])

    # show the incorrectly classified images
    # the model outputs one sigmoid probability per image, so threshold at 0.5
    # (an argmax over a single column would always return 0)
    predictions = (hand_model.predict(x_test_nn) > 0.5).astype(int).ravel()
    actuals = np.asarray(y_test_nn).ravel()
    incorrect = (predictions != actuals)

    x_incorrectly_classified = x_test_nn[incorrect]
    y_incorrectly_classified = actuals[incorrect]
    y_predicted = predictions[incorrect]

    for i in range(len(y_predicted)):
        # display the image using the numpy array
        plt.imshow(x_incorrectly_classified[i].reshape(fingers_dataset['np_train']['X'].shape[1:]))
        plt.show()
        print('Prediction:{}, actual:{}'.format(y_predicted[i],y_incorrectly_classified[i]))

    Train on 18000 samples
    Epoch 1/5
    18000/18000 [==============================] - 171s 10ms/sample - loss: 0.0289 - accuracy: 0.9909
    Epoch 2/5
    18000/18000 [==============================] - 143s 8ms/sample - loss: 7.2692e-04 - accuracy: 1.0000
    Epoch 3/5
    18000/18000 [==============================] - 145s 8ms/sample - loss: 3.1750e-04 - accuracy: 1.0000
    Epoch 4/5
    18000/18000 [==============================] - 146s 8ms/sample - loss: 1.7821e-04 - accuracy: 1.0000
    Epoch 5/5
    18000/18000 [==============================] - 145s 8ms/sample - loss: 1.1194e-04 - accuracy: 1.0000
    Train set accuracy: 1.0
    Test set accuracy: 1.0

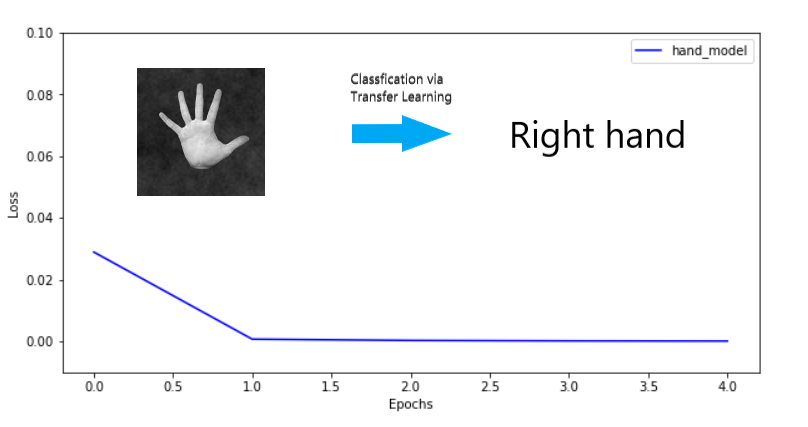
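With a single Sigmoid output, class labels come from thresholding the predicted probability rather than from an argmax over classes. The sketch below shows that step, and how misclassified samples can be located, on made-up probabilities. The helper names and toy values are hypothetical.

```python
def to_labels(probabilities, threshold=0.5):
    """Turn sigmoid probabilities into 0/1 labels."""
    return [1 if p > threshold else 0 for p in probabilities]

def misclassified_indices(predicted, actual):
    """Positions where the predicted label differs from the true label."""
    return [i for i, (p, a) in enumerate(zip(predicted, actual)) if p != a]

toy_probs = [0.98, 0.03, 0.61, 0.40]  # hypothetical model outputs
toy_actuals = [1, 0, 0, 0]            # hypothetical true labels

toy_preds = to_labels(toy_probs)
wrong = misclassified_indices(toy_preds, toy_actuals)
accuracy = 1 - len(wrong) / len(toy_actuals)
print(toy_preds, wrong, accuracy)
```

When the accuracy is 1.0, as in the run above, the list of misclassified indices is empty.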

And we get perfect accuracy on the test set. Additionally, the training log above and the loss curve below show how quickly the model converges: training accuracy reaches 1.0 by the second epoch.

    fig, axes = plt.subplots(nrows=1,sharex=True,figsize=(10,5))

    axes.plot(hand_model.history.history['loss'],label=hand_model.name,c='b')

    axes.set_ylim(-0.01,0.1)

    axes.legend()

    axes.set_xlabel('Epochs')
    axes.set_ylabel('Loss')

Let’s save the model to be used later:

    # Save the model
    hand_model.save(model_h5_rlmodel_save)

    # load the model
    hand_model = load_model(model_h5_rlmodel_save)

    hand_model.summary()

    Model: "hand_model"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    input_7 (InputLayer)         [(None, 128, 128, 1)]     0
    _________________________________________________________________
    conv2d_2 (Conv2D)            (None, 63, 63, 64)        1088
    _________________________________________________________________
    batch_normalization_3 (Batch (None, 63, 63, 64)        256
    _________________________________________________________________
    activation_5 (Activation)    (None, 63, 63, 64)        0
    _________________________________________________________________
    conv2d_3 (Conv2D)            (None, 31, 31, 32)        18464
    _________________________________________________________________
    batch_normalization_4 (Batch (None, 31, 31, 32)        128
    _________________________________________________________________
    activation_6 (Activation)    (None, 31, 31, 32)        0
    _________________________________________________________________
    flatten_1 (Flatten)          (None, 30752)             0
    _________________________________________________________________
    fc_lr (Dense)                (None, 1)                 30753
    =================================================================
    Total params: 50,689
    Trainable params: 30,753
    Non-trainable params: 19,936

Summary

We have seen how the layers of a model that has already been built and optimised can be re-used for a new classification task. The important point to note is that when the new task has different labels from the one the original model was optimised for, at least the output layer must be replaced and trained.

Next Time

In Part 3, I will take a few photos of my hand using my mobile phone and upload them to see if this model here can actually classify them with good accuracy. We will also test images which are taken differently to the images used here (i.e. with a white background, including the wrist etc…).